Let's start with the same old sequential for loop. It iterates through a slice of integers, adds `j` and `k` to each element, and prints the sum.

Note: I'm running this on OS X 10.9 with a Core i5 and 4 GB of RAM. Running it on the Go Playground may report a different elapsed time, probably 0.

```go
package main

import (
	"fmt"
	"log"
	"runtime"
	"time"
)

func main() {
	// Setting concurrency to maximum (GOMAXPROCS already defaults to the CPU count since Go 1.5)
	runtime.GOMAXPROCS(runtime.NumCPU())

	j, k := 10, 20

	// Sample slice: 0, 1, ..., 10299
	var Dat []int
	for i := 0; i < 10300; i++ {
		Dat = append(Dat, i)
	}

	// 1. Simple for loop
	Start := time.Now()
	for _, v := range Dat {
		fmt.Println(v + j + k) // print each element of Dat plus j and k
	}
	log.Println("Time taken for sequential : ", time.Since(Start))
```

Time taken on my Mac: 12.844223ms

Now let's make it parallel (more precisely, concurrent) using function literals, one goroutine per element:

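```go
	// 2. Full parallel loop: one goroutine per element
	Start = time.Now()
	for _, v := range Dat {
		go func(v, j, k int) {
			fmt.Println(v + j + k)
		}(v, j, k)
	}
	// The goroutines are not awaited, so this measures launching them,
	// and main may exit before all of them have printed.
	log.Println("Time taken with Concurrent Go routines : ", time.Since(Start))
```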

Time taken: 13.199926ms

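That's a slight increase in time, though it will depend on the hardware. What I want to show is that simply spawning goroutines won't yield maximum throughput: each goroutine here does very little work, so the cost of creating and scheduling one per element can outweigh the gain.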

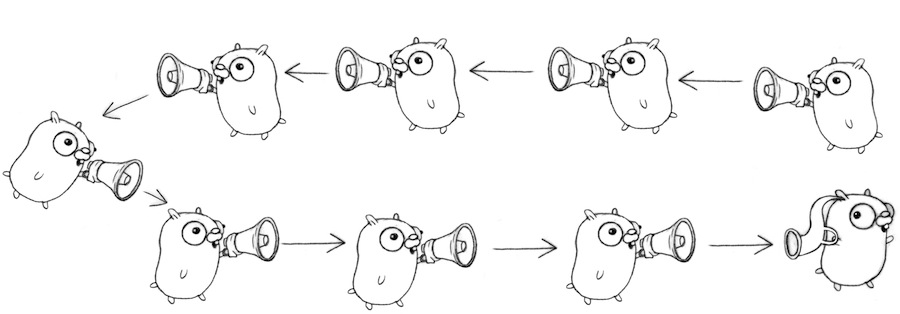
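The per-element loop above stops the clock as soon as the goroutines are launched, so its timing doesn't cover all of the work, and `main` can exit before every goroutine has printed. A minimal sketch that waits with `sync.WaitGroup`, assuming results are stored in a slice rather than printed (`perElement` is a hypothetical helper, not from the original code):

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// perElement is a hypothetical helper for illustration: it starts one
// goroutine per element and waits for all of them, so a timer around it
// covers the work itself, not just the launches.
func perElement(data []int, j, k int) []int {
	out := make([]int, len(data))
	var wg sync.WaitGroup
	for idx, v := range data {
		wg.Add(1)
		go func(idx, v int) {
			defer wg.Done()
			out[idx] = v + j + k // each goroutine writes only its own index
		}(idx, v)
	}
	wg.Wait() // block until every goroutine has called Done
	return out
}

func main() {
	data := make([]int, 10300)
	for i := range data {
		data[i] = i
	}
	start := time.Now()
	out := perElement(data, 10, 20)
	fmt.Println("last value:", out[len(out)-1], "elapsed:", time.Since(start))
}
```

Because each goroutine writes to a distinct index of `out`, no mutex is needed.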

Let's move on to a semi-parallel for loop. The idea is to divide the data into chunks and use one goroutine per chunk:

```go
	// 3. Semi-parallel loop

	// 3.1 Calculate the index array and chunk size.
	// The chunk size should be chosen based on how long it takes to process
	// a single unit of data; 1000 is used here for demo purposes.
	chSize := 1000

	// The index array is derived from the actual slice length and chunk size.
	DevisnResult := len(Dat) / chSize
	indexes := []int{}
	for i := 0; i < DevisnResult; i++ {
		indexes = append(indexes, i*chSize)
	}
	// Dat has 10300 elements, so indexes is [0 1000 2000 3000 4000 5000 6000 7000 8000 9000]

	// 3.2 Loop: one goroutine per full chunk
	Start = time.Now()
	for _, p := range indexes {
		go func(Slc []int, j, k int) {
			for _, v := range Slc {
				fmt.Println(v + j + k) // use the element value, not its index within the chunk
			}
		}(Dat[p:p+chSize], j, k)
	}

	// Process the leftover elements (here the remainder is 300)
	if DevReminder := len(Dat) % chSize; DevReminder != 0 {
		go func(Slc []int, j, k int) {
			for _, v := range Slc {
				fmt.Println(v + j + k)
			}
		}(Dat[DevisnResult*chSize:], j, k)
	}

	// As above, the goroutines are not awaited, so this measures launch time only.
	log.Println("Time taken For semi-parallel loop: ", time.Since(Start))
}
```

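Time taken: 15.217µs, far less than the two above. Note that `time.Since(Start)` is read as soon as the 11 goroutines (10 full chunks plus the remainder) have been started, without waiting for them to finish, so this figure mostly reflects launch cost; the same is true of the fully concurrent version.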
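A minimal sketch of the semi-parallel loop that waits for every chunk before the clock is read, assuming the same 1000-element chunks and writing results into a slice instead of printing. `semiParallel` is a hypothetical name, and the remainder is handled by clamping the last chunk's end rather than by a separate goroutine:

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// semiParallel is a hypothetical helper for illustration: it processes data
// in chunks of chSize, one goroutine per chunk, and waits for all chunks.
func semiParallel(data []int, chSize, j, k int) []int {
	out := make([]int, len(data))
	var wg sync.WaitGroup
	for start := 0; start < len(data); start += chSize {
		end := start + chSize
		if end > len(data) {
			end = len(data) // the last chunk holds the remainder
		}
		wg.Add(1)
		go func(start, end int) {
			defer wg.Done()
			for i := start; i < end; i++ {
				out[i] = data[i] + j + k // each goroutine writes only its own range
			}
		}(start, end)
	}
	wg.Wait()
	return out
}

func main() {
	data := make([]int, 10300)
	for i := range data {
		data[i] = i
	}
	start := time.Now()
	out := semiParallel(data, 1000, 10, 20)
	fmt.Println("last value:", out[len(out)-1], "elapsed:", time.Since(start))
}
```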

Semi-parallel for loops, with the chunk size decided dynamically, are the way to get maximum throughput. If the data needs to be merged after processing (the reduce part of map-reduce), use semaphore channels and add another parallel merge function.
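One way to add the reduce step, sketched under the assumption that the per-chunk work is a partial sum: a buffered channel serves as a semaphore capping how many chunk goroutines run at once, and partial sums are merged from a results channel. `parallelSum` and `maxWorkers` are hypothetical names, and the merge here is a simple loop in the caller rather than a separate parallel merge function:

```go
package main

import "fmt"

// parallelSum is a hypothetical helper for illustration. It sums data in
// chunks of chSize; a buffered channel of capacity maxWorkers (must be >= 1)
// acts as a semaphore, and partial sums are merged from a results channel.
func parallelSum(data []int, chSize, maxWorkers int) int {
	sem := make(chan struct{}, maxWorkers)
	results := make(chan int)
	chunks := 0
	for start := 0; start < len(data); start += chSize {
		end := start + chSize
		if end > len(data) {
			end = len(data)
		}
		chunks++
		go func(part []int) {
			sem <- struct{}{}        // acquire a slot
			defer func() { <-sem }() // release it when done
			sum := 0
			for _, v := range part {
				sum += v
			}
			results <- sum
		}(data[start:end])
	}
	total := 0
	for i := 0; i < chunks; i++ {
		total += <-results // merge (reduce) the partial sums
	}
	return total
}

func main() {
	data := make([]int, 10300)
	for i := range data {
		data[i] = i
	}
	fmt.Println(parallelSum(data, 1000, 4)) // prints 53039850
}
```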

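I'm running this from Sublime Text, where the log.Println() output always appears after the fmt.Println() output (log writes to standard error, fmt.Println to standard output). On the Playground, you need to scroll down through the results to see the printed time.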